spaCy is a robust open-source library for Python, ideal for natural language processing (NLP) tasks. It offers built-in capabilities for tokenization, dependency parsing, and named-entity recognition, making it a popular choice for processing and analyzing text. With spaCy, you can efficiently represent unstructured text in a computer-readable format, enabling automation of text analysis and extraction of meaningful insights.

By the end of this tutorial, you’ll understand that:

- You can use spaCy for natural language processing tasks such as part-of-speech tagging and named-entity recognition.

- spaCy is often preferred over NLTK for production environments due to its performance and modern design.

- spaCy provides integration with transformer models, such as BERT.

- You handle tokenization in spaCy by breaking text into tokens using its efficient built-in tokenizer.

- Dependency parsing in spaCy helps you understand grammatical structures by identifying relationships between headwords and dependents.

Unstructured text is produced by companies, governments, and the general population at an incredible scale. Much of it is far too large for humans to process by hand, so it’s often important to automate its processing and analysis. To do that, you need to represent the text in a format that computers can understand. spaCy can help you with that.

If you’re new to NLP, don’t worry! Before you start using spaCy, you’ll first learn about the foundational terms and concepts in NLP. You should be familiar with the basics in Python, though. The code in this tutorial contains dictionaries, lists, tuples, for loops, comprehensions, object-oriented programming, and lambda functions, among other fundamental Python concepts.


Introduction to NLP and spaCy

NLP is a subfield of artificial intelligence, and it’s all about allowing computers to comprehend human language. NLP involves analyzing, quantifying, understanding, and deriving meaning from natural languages.

Note: Currently, the most powerful NLP models are transformer-based. BERT from Google and the GPT family from OpenAI are examples of such models.

Since the release of version 3.0, spaCy supports transformer-based models. The examples in this tutorial are done with a smaller, CPU-optimized model. However, you can run the examples with a transformer model instead. Many Hugging Face transformer models can be used with spaCy through the spacy-transformers package.

NLP helps you extract insights from unstructured text and has many use cases.

spaCy is a free, open-source library for NLP in Python written in Cython. It’s a modern, production-focused NLP library that emphasizes speed, streamlined workflows, and robust pretrained models. spaCy is designed to make it easy to build systems for information extraction or general-purpose natural language processing.

Another popular Python NLP library that you may have heard of is Python’s Natural Language Toolkit (NLTK). NLTK is a classic toolkit that’s widely used in research and education. It offers an extensive range of algorithms and corpora but with less emphasis on high-throughput performance than spaCy.

In this tutorial, you’ll learn how to work with spaCy, which is a great choice for building production-ready NLP applications.

Installation of spaCy

In this section, you’ll install spaCy into a virtual environment and then download data and models for the English language.

You can install spaCy using pip, a Python package manager. It’s a good idea to use a virtual environment to avoid depending on system-wide packages. To learn more about virtual environments and pip, check out Using Python’s pip to Manage Your Projects’ Dependencies and Python Virtual Environments: A Primer.

First, you’ll create a new virtual environment, activate it, and install spaCy with pip.

With spaCy installed in your virtual environment, you’re almost ready to get started with NLP. But there’s one more thing you’ll have to install:

(venv) $ python -m spacy download en_core_web_sm

There are various spaCy models for different languages. The default model for the English language is designated as en_core_web_sm. Since the models are quite large, it’s best to install them separately—including all languages in one package would make the download too massive.

Once the en_core_web_sm model has finished downloading, open up a Python REPL and verify that the installation has been successful:

>>> import spacy

>>> nlp = spacy.load("en_core_web_sm")

If these lines run without any errors, then it means that spaCy was installed and that the models and data were successfully downloaded. You’re now ready to dive into NLP with spaCy!
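If you’d rather check for the model from a script than wait for an exception, a small sketch like the following may help. It assumes only that spaCy itself is installed. The names MODEL_NAME and model_available are illustrative and not part of the tutorial’s code.

```python
import spacy
from spacy.util import is_package

MODEL_NAME = "en_core_web_sm"

# is_package() reports whether a pipeline package with this name is installed.
model_available = is_package(MODEL_NAME)

if model_available:
    nlp = spacy.load(MODEL_NAME)
    print(f"Loaded {MODEL_NAME} with components: {nlp.pipe_names}")
else:
    print(f"{MODEL_NAME} is missing. Run: python -m spacy download {MODEL_NAME}")
```

Either branch leaves you with a clear message instead of a traceback.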

The Doc Object for Processed Text

In this section, you’ll use spaCy to deconstruct a given input string, and you’ll also read the same text from a file.

First, you need to load the language model instance in spaCy:

>>> import spacy

>>> nlp = spacy.load("en_core_web_sm")

>>> nlp

<spacy.lang.en.English at 0x291003a6bf0>

The load() function returns a Language callable object, which is commonly assigned to a variable called nlp.

To start processing your input, you construct a Doc object. A Doc object is a sequence of Token objects, each representing a lexical token. Each Token object has information about a particular piece of text, typically one word. You can instantiate a Doc object by calling the Language object with the input string as an argument:

>>> introduction_doc = nlp(

... "This tutorial is about Natural Language Processing in spaCy."

... )

>>> type(introduction_doc)

spacy.tokens.doc.Doc

>>> [token.text for token in introduction_doc]

['This', 'tutorial', 'is', 'about', 'Natural', 'Language',

'Processing', 'in', 'spaCy', '.']

In the above example, the text is used to instantiate a Doc object. From there, you can access a whole bunch of information about the processed text.

For instance, you iterated over the Doc object with a list comprehension that produces a series of Token objects. On each Token object, you called the .text attribute to get the text contained within that token.
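Because a Doc behaves like a sequence, you can also take its length, index it, and slice it. The sketch below uses a blank English pipeline, which contains only the tokenizer, so it should run even without a downloaded model. That choice is an assumption made for this illustration and differs from the en_core_web_sm pipeline used elsewhere in this tutorial.

```python
import spacy

# A blank pipeline has the English tokenizer but no trained components.
nlp = spacy.blank("en")
doc = nlp("This tutorial is about Natural Language Processing in spaCy.")

first_token = doc[0]  # indexing returns a Token
topic = doc[4:7]      # slicing returns a Span

print(len(doc))
print(first_token.text)
print(topic.text)
```

Indexing gives you a single Token, while slicing gives you a Span, which is the same type that you’ll meet again when working with sentences.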

You won’t typically be copying and pasting text directly into the constructor, though. Instead, you’ll likely be reading it from a file:

>>> import pathlib

>>> file_name = "introduction.txt"

>>> introduction_doc = nlp(pathlib.Path(file_name).read_text(encoding="utf-8"))

>>> print([token.text for token in introduction_doc])

['This', 'tutorial', 'is', 'about', 'Natural', 'Language',

'Processing', 'in', 'spaCy', '.', '\n']

In this example, you read the contents of the introduction.txt file with the .read_text() method of the pathlib.Path object. Since the file contains the same text as the previous example, you’ll get the same tokens. The only difference is a final '\n' token, which comes from the newline at the end of the file.

Sentence Detection

Sentence detection is the process of locating where sentences start and end in a given text. This allows you to divide a text into linguistically meaningful units. You’ll use these units when you’re processing your text to perform tasks such as part-of-speech (POS) tagging and named-entity recognition, which you’ll come to later in the tutorial.

In spaCy, the .sents property is used to extract sentences from the Doc object. Here’s how you would extract the total number of sentences and the sentences themselves for a given input:

>>> about_text = (

... "Gus Proto is a Python developer currently"

... " working for a London-based Fintech"

... " company. He is interested in learning"

... " Natural Language Processing."

... )

>>> about_doc = nlp(about_text)

>>> sentences = list(about_doc.sents)

>>> len(sentences)

2

>>> for sentence in sentences:

...     print(f"{sentence[:5]}...")

...

Gus Proto is a Python...

He is interested in learning...

In the above example, spaCy correctly identifies the input’s sentences. The .sents property yields Span objects representing individual sentences, which you collect into a list. You can also slice the Span objects to produce sections of a sentence.
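If you only need sentence boundaries and not a full parse, spaCy also ships a rule-based sentencizer component that splits on sentence-final punctuation. The following is a minimal sketch that adds it to a blank English pipeline. The variable name nlp_rules is illustrative, and this setup is an alternative to the parser-based detection shown above, not a replacement for it.

```python
import spacy

# Blank pipeline plus the built-in rule-based sentence splitter.
nlp_rules = spacy.blank("en")
nlp_rules.add_pipe("sentencizer")

about_text = (
    "Gus Proto is a Python developer currently"
    " working for a London-based Fintech"
    " company. He is interested in learning"
    " Natural Language Processing."
)

sentences = list(nlp_rules(about_text).sents)
for sentence in sentences:
    print(sentence.text)
```

The sentencizer is usually faster than the parser, but it relies on punctuation only, so it may be less accurate on text with unusual punctuation.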

You can also customize sentence detection behavior by using custom delimiters. Here’s an example where an ellipsis (...) is used as a delimiter, in addition to the full stop, or period (.):

>>> ellipsis_text = (

... "Gus, can you, ... never mind, I forgot"

... " what I was saying. So, do you think"

... " we should ..."

... )

>>> from spacy.language import Language

>>> @Language.component("set_custom_boundaries")

... def set_custom_boundaries(doc):

...     """Add support to use `...` as a delimiter for sentence detection"""

...     for token in doc[:-1]:

...         if token.text == "...":

...             doc[token.i + 1].is_sent_start = True

...     return doc

...

>>> custom_nlp = spacy.load("en_core_web_sm")

>>> custom_nlp.add_pipe("set_custom_boundaries", before="parser")

>>> custom_ellipsis_doc = custom_nlp(ellipsis_text)

>>> custom_ellipsis_sentences = list(custom_ellipsis_doc.sents)

>>> for sentence in custom_ellipsis_sentences:

...     print(sentence)

...

Gus, can you, ...

never mind, I forgot what I was saying.

So, do you think we should ...

For this example, you used the @Language.component("set_custom_boundaries") decorator to define a new function that takes a Doc object as an argument. The job of this function is to identify tokens in Doc that are the beginning of sentences and set their .is_sent_start attribute to True. Once done, the function must return the Doc object again.

Then, you add the custom boundary function to the Language object by using the .add_pipe() method. You place it before the parser so that the parser respects the boundaries you’ve already set. Parsing text with this modified Language object will now treat the word after an ellipsis as the start of a new sentence.

Tokens in spaCy

Building the Doc container involves tokenizing the text. The process of tokenization breaks a text down into its basic units—or tokens—which are represented in spaCy as Token objects.

As you’ve already seen, with spaCy, you can print the tokens by iterating over the Doc object. But Token objects also have other attributes available for exploration. For instance, the token’s original index position in the string is still available as an attribute on Token:

>>> import spacy

>>> nlp = spacy.load("en_core_web_sm")

>>> about_text = (

... "Gus Proto is a Python developer currently"

... " working for a London-based Fintech"

... " company. He is interested in learning"

... " Natural Language Processing."

... )

>>> about_doc = nlp(about_text)

>>> for token in about_doc:

...     print(token, token.idx)

...

Gus 0

Proto 4

is 10

a 13

Python 15

developer 22

currently 32

working 42

for 50

a 54

London 56

- 62

based 63

Fintech 69

company 77

. 84

He 86

is 89

interested 92

in 103

learning 106

Natural 115

Language 123

Processing 132

. 142

In this example, you iterate over Doc, printing both Token and the .idx attribute, which represents the starting position of the token in the original text. Keeping this information could be useful for in-place word replacement down the line, for example.
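To make the idea of in-place replacement concrete, here’s a small sketch that uses .idx together with the token’s length to slice the original string. It runs on a blank English pipeline for simplicity, and the sentence and the replacement word are made up for this illustration.

```python
import spacy

nlp = spacy.blank("en")
text = "Gus Proto works for a London-based Fintech company."
doc = nlp(text)

# Each token's text can be recovered from the original string via its offset.
offsets_match = all(
    text[token.idx : token.idx + len(token)] == token.text for token in doc
)

# Replace one word by slicing around its character offsets.
target = next(token for token in doc if token.text == "Fintech")
replaced = text[: target.idx] + "financial" + text[target.idx + len(target) :]

print(offsets_match)
print(replaced)
```

Because the offsets refer to the original string, everything outside the replaced word, including whitespace and punctuation, stays untouched.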

spaCy provides various other attributes for the Token class:

>>> print(

...     f"{'Text with Whitespace':22}"

...     f"{'Is Alphabetic?':15}"

...     f"{'Is Punctuation?':18}"

...     f"{'Is Stop Word?'}"

... )

>>> for token in about_doc:

...     print(

...         f"{str(token.text_with_ws):22}"

...         f"{str(token.is_alpha):15}"

...         f"{str(token.is_punct):18}"

...         f"{str(token.is_stop)}"

...     )

...

Text with Whitespace  Is Alphabetic? Is Punctuation?   Is Stop Word?

Gus True False False

Proto True False False

is True False True

a True False True

Python True False False

developer True False False

currently True False False

working True False False

for True False True

a True False True

London True False False

- False True False

based True False False

Fintech True False False

company True False False

. False True False

He True False True

is True False True

interested True False False

in True False True

learning True False False

Natural True False False

Language True False False

Processing True False False

. False True False

In this example, you use f-string formatting to output a table accessing some common attributes from each Token in Doc:

- .text_with_ws prints the token text along with any trailing space, if present.

- .is_alpha indicates whether the token consists of alphabetic characters or not.

- .is_punct indicates whether the token is a punctuation symbol or not.

- .is_stop indicates whether the token is a stop word or not. You’ll be covering stop words a bit later in this tutorial.

As with many aspects of spaCy, you can also customize the tokenization process to detect tokens on custom characters. This is often used for hyphenated words such as London-based.

To customize tokenization, you need to update the tokenizer property on the callable Language object with a new Tokenizer object.

To see what’s involved, imagine you had some text that used the @ symbol instead of the usual hyphen (-) as an infix to link words together. So, instead of London-based, you had London@based:

>>> custom_about_text = (

... "Gus Proto is a Python developer currently"

... " working for a London@based Fintech"

... " company. He is interested in learning"

... " Natural Language Processing."

... )

>>> print([token.text for token in nlp(custom_about_text)[8:15]])

['for', 'a', 'London@based', 'Fintech', 'company', '.', 'He']

In this example, the default tokenizer read the London@based text as a single token. If you’d used a hyphen instead of the @ symbol, then you’d get three tokens.

To include the @ symbol as a custom infix, you need to build your own Tokenizer object:

1>>> import re

2>>> from spacy.tokenizer import Tokenizer

3

4>>> custom_nlp = spacy.load("en_core_web_sm")

5>>> prefix_re = spacy.util.compile_prefix_regex(

6...     custom_nlp.Defaults.prefixes

7... )

8>>> suffix_re = spacy.util.compile_suffix_regex(

9...     custom_nlp.Defaults.suffixes

10... )

11

12>>> custom_infixes = [r"@"]

13

14>>> infix_re = spacy.util.compile_infix_regex(

15...     list(custom_nlp.Defaults.infixes) + custom_infixes

16... )

17

18>>> custom_nlp.tokenizer = Tokenizer(

19...     custom_nlp.vocab,

20...     prefix_search=prefix_re.search,

21...     suffix_search=suffix_re.search,

22...     infix_finditer=infix_re.finditer,

23...     token_match=None,

24... )

25

26>>> custom_tokenizer_about_doc = custom_nlp(custom_about_text)

27

28>>> print([token.text for token in custom_tokenizer_about_doc[8:15]])

29['for', 'a', 'London', '@', 'based', 'Fintech', 'company']

In this example, you first instantiate a new Language object. To build a new Tokenizer, you generally provide it with:

- Vocab: A storage container for special cases, which is used to handle cases like contractions and emoticons.

- prefix_search: A function that handles preceding punctuation, such as opening parentheses.

- suffix_search: A function that handles succeeding punctuation, such as closing parentheses.

- infix_finditer: A function that handles non-whitespace separators, such as hyphens.

- token_match: An optional Boolean function that matches strings that should never be split. It overrides the previous rules and is useful for entities like URLs or numbers.

The functions involved are typically regex functions that you can access from compiled regex objects. To build the regex objects for the prefixes and suffixes—which you don’t want to customize—you can generate them with the defaults, shown on lines 5 to 10.

To make a custom infix function, first you define a new list on line 12 with any regex patterns that you want to include. Then, you join your custom list with the Language object’s .Defaults.infixes attribute, which needs to be cast to a list before joining. You want to do this to include all the existing infixes. Then you pass the extended list as an argument to spacy.util.compile_infix_regex() to obtain your new regex object for infixes.

When you call the Tokenizer constructor, you pass the .search() method on the prefix and suffix regex objects, and the .finditer() function on the infix regex object. Now you can replace the tokenizer on the custom_nlp object.

After that’s done, you’ll see that the @ symbol is now tokenized separately.
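If the infixes are the only thing you want to change, then a shorter route may be to keep the existing tokenizer and replace just its infix_finditer attribute. The sketch below takes that approach on a blank English pipeline. The name nlp_at and the short sample sentence are assumptions made for this illustration.

```python
import spacy
from spacy.util import compile_infix_regex

nlp_at = spacy.blank("en")

# Extend the default infix patterns with the @ symbol.
infixes = list(nlp_at.Defaults.infixes) + [r"@"]

# Swap only the infix matcher; prefixes, suffixes, and special cases stay as they are.
nlp_at.tokenizer.infix_finditer = compile_infix_regex(infixes).finditer

tokens = [token.text for token in nlp_at("a London@based company")]
print(tokens)
```

Unlike building a new Tokenizer from scratch, this keeps the tokenizer’s existing special-case rules, such as those for contractions.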

Stop Words

Stop words are typically defined as the most common words in a language. In the English language, some examples of stop words are the, are, but, and they. Most sentences need to contain stop words in order to be full sentences that make grammatical sense.

With NLP, stop words are often removed because they carry little meaning on their own and can heavily distort any word frequency analysis. spaCy stores a list of stop words for the English language:

>>> import spacy

>>> spacy_stopwords = spacy.lang.en.stop_words.STOP_WORDS

>>> len(spacy_stopwords)

326

>>> for stop_word in list(spacy_stopwords)[:10]:

...     print(stop_word)

...

using

becomes

had

itself

once

often

is

herein

who

too

In this example, you’ve examined the STOP_WORDS set from spacy.lang.en.stop_words. Because it’s a set, the ten words that you see may differ from the ones shown here. You don’t need to access this set directly, though. You can remove stop words from the input text by making use of the .is_stop attribute of each token:

>>> custom_about_text = (

... "Gus Proto is a Python developer currently"

... " working for a London-based Fintech"

... " company. He is interested in learning"

... " Natural Language Processing."

... )

>>> nlp = spacy.load("en_core_web_sm")

>>> about_doc = nlp(custom_about_text)

>>> print([token for token in about_doc if not token.is_stop])

[Gus, Proto, Python, developer, currently, working, London, -, based, Fintech,

company, ., interested, learning, Natural, Language, Processing, .]

Here you use a list comprehension with an if clause to produce a list of all the tokens in the text that aren’t stop words.

While you can’t be sure exactly what the sentence is trying to say without stop words, you still have a lot of information about what it’s generally about.
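The .is_stop check is a lexical attribute, so it doesn’t need a trained model, and it ignores case. Here’s a brief sketch on a blank English pipeline that shows both points. The sample phrase is taken from the text above, and the variable name kept is illustrative.

```python
import spacy
from spacy.lang.en.stop_words import STOP_WORDS

nlp = spacy.blank("en")
doc = nlp("He is interested in learning")

# "He" is filtered out even though the stop word set stores it in lowercase.
kept = [token.text for token in doc if not token.is_stop]

print("he" in STOP_WORDS)
print(kept)
```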

Lemmatization

Lemmatization is the process of reducing inflected forms of a word while still ensuring that the reduced form belongs to the language. This reduced form, or root word, is called a lemma.

For example, organizes, organized and organizing are all forms of organize. Here, organize is the lemma. The inflection of a word allows you to express different grammatical categories, like tense (organized vs organize), number (trains vs train), and so on. Lemmatization is necessary because it helps you reduce the inflected forms of a word so that they can be analyzed as a single item. It can also help you normalize the text.

spaCy puts a lemma_ attribute on the Token class. This attribute has the lemmatized form of the token:

>>> import spacy

>>> nlp = spacy.load("en_core_web_sm")

>>> conference_help_text = (

... "Gus is helping organize a developer"

... " conference on Applications of Natural Language"

... " Processing. He keeps organizing local Python meetups"

... " and several internal talks at his workplace."

... )

>>> conference_help_doc = nlp(conference_help_text)

>>> for token in conference_help_doc:

...     if str(token) != str(token.lemma_):

...         print(f"{str(token):>20} : {str(token.lemma_)}")

...

is : be

He : he

keeps : keep

organizing : organize

meetups : meetup

talks : talk

In this example, you check to see if the original word is different from the lemma, and if it is, you print both the original word and its lemma.

You’ll note, for instance, that organizing reduces to its lemma form, organize. If you don’t lemmatize the text, then organize and organizing will be counted as different tokens, even though they both refer to the same concept. Lemmatization helps you avoid duplicate words that may overlap conceptually.

Word Frequency

You can now convert a given text into tokens and perform statistical analysis on it. This analysis can give you various insights, such as common words or unique words in the text:

>>> import spacy

>>> from collections import Counter

>>> nlp = spacy.load("en_core_web_sm")

>>> complete_text = (

... "Gus Proto is a Python developer currently"

... " working for a London-based Fintech company. He is"

... " interested in learning Natural Language Processing."

... " There is a developer conference happening on 21 July"

... ' 2019 in London. It is titled "Applications of Natural'

... ' Language Processing". There is a helpline number'

... " available at +44-1234567891. Gus is helping organize it."

... " He keeps organizing local Python meetups and several"

... " internal talks at his workplace. Gus is also presenting"

... ' a talk. The talk will introduce the reader about "Use'

... ' cases of Natural Language Processing in Fintech".'

... " Apart from his work, he is very passionate about music."

... " Gus is learning to play the Piano. He has enrolled"

... " himself in the weekend batch of Great Piano Academy."

... " Great Piano Academy is situated in Mayfair or the City"

... " of London and has world-class piano instructors."

... )

>>> complete_doc = nlp(complete_text)

>>> words = [

...     token.text

...     for token in complete_doc

...     if not token.is_stop and not token.is_punct

... ]

>>> print(Counter(words).most_common(5))

[('Gus', 4), ('London', 3), ('Natural', 3), ('Language', 3), ('Processing', 3)]

By looking just at the common words, you can probably assume that the text is about Gus, London, and Natural Language Processing. That’s a significant finding! If you can just look at the most common words, that may save you a lot of reading, because you can immediately tell if the text is about something that interests you or not.

That’s not to say this process is guaranteed to give you good results. You are losing some information along the way, after all.

That said, to illustrate why removing stop words can be useful, here’s another example of the same text including stop words:

>>> Counter(

...     [token.text for token in complete_doc if not token.is_punct]

... ).most_common(5)

[('is', 10), ('a', 5), ('in', 5), ('Gus', 4), ('of', 4)]

Four out of five of the most common words are stop words that don’t really tell you much about the summarized text. This is why stop words are often considered noise for many applications.
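Counting token.text treats Piano and piano as different words. If that distinction doesn’t matter for your analysis, then one option is to count the lowercase form instead. This is a minimal sketch on a blank English pipeline, with a shortened sample text that was made up for this illustration.

```python
from collections import Counter

import spacy

nlp = spacy.blank("en")
doc = nlp(
    "Gus is learning to play the Piano."
    " The academy has world-class piano instructors."
)

# Count lowercase forms so that "Piano" and "piano" land in the same bucket.
counts = Counter(
    token.lower_
    for token in doc
    if not token.is_stop and not token.is_punct
)

print(counts.most_common(1))
```

Lowercasing is a cheaper normalization than lemmatization, but it also merges words that differ only by case, such as names and common nouns.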

Part-of-Speech Tagging

Part of speech or POS is a grammatical role that explains how a particular word is used in a sentence. There are typically eight parts of speech:

- Noun

- Pronoun

- Adjective

- Verb

- Adverb

- Preposition

- Conjunction

- Interjection

Part-of-speech tagging is the process of assigning a POS tag to each token depending on its usage in the sentence. POS tags are useful for assigning a syntactic category like noun or verb to each word.

In spaCy, POS tags are available as an attribute on the Token object:

>>> import spacy

>>> nlp = spacy.load("en_core_web_sm")

>>> about_text = (

... "Gus Proto is a Python developer currently"

... " working for a London-based Fintech"

... " company. He is interested in learning"

... " Natural Language Processing."

... )

>>> about_doc = nlp(about_text)

>>> for token in about_doc:

...     print(

...         f"""

... TOKEN: {str(token)}

... =====

... TAG: {str(token.tag_):10} POS: {token.pos_}

... EXPLANATION: {spacy.explain(token.tag_)}"""

...     )

...

TOKEN: Gus

=====

TAG: NNP POS: PROPN

EXPLANATION: noun, proper singular

TOKEN: Proto

=====

TAG: NNP POS: PROPN

EXPLANATION: noun, proper singular

TOKEN: is

=====

TAG: VBZ POS: AUX

EXPLANATION: verb, 3rd person singular present

TOKEN: a

=====

TAG: DT POS: DET

EXPLANATION: determiner

TOKEN: Python

=====

TAG: NNP POS: PROPN

EXPLANATION: noun, proper singular

...

Here, two attributes of the Token class are accessed and printed using f-strings:

- .tag_ displays a fine-grained tag.

- .pos_ displays a coarse-grained tag, which is a reduced version of the fine-grained tags.

You also use spacy.explain() to give descriptive details about a particular POS tag, which can be a valuable reference tool.
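Since spacy.explain() is a plain glossary lookup, you can call it on any label without processing text first. The following sketch looks up a handful of the tags from the output above. The selection of labels is arbitrary.

```python
import spacy

# Look up a few fine-grained and coarse-grained labels in spaCy's glossary.
labels = ("NNP", "VBZ", "DT", "PROPN", "AUX")
for label in labels:
    print(f"{label:6} {spacy.explain(label)}")

explanation = spacy.explain("NNP")
```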

By using POS tags, you can extract a particular category of words:

>>> nouns = []

>>> adjectives = []

>>> for token in about_doc:

...     if token.pos_ == "NOUN":

...         nouns.append(token)

...     if token.pos_ == "ADJ":

...         adjectives.append(token)

...

>>> nouns

[developer, company]

>>> adjectives

[interested]

You can use this type of word classification to derive insights. For instance, you could gauge sentiment by analyzing which adjectives are most commonly used alongside nouns.

Visualization: Using displaCy

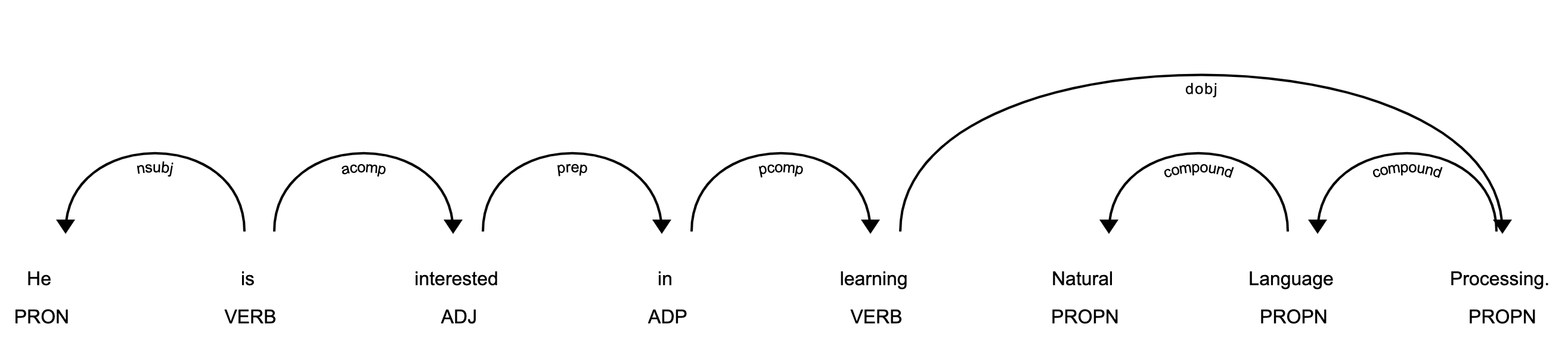

spaCy comes with a built-in visualizer called displaCy. You can use it to visualize a dependency parse or named entities in a browser or a Jupyter notebook.

You can use displaCy to find POS tags for tokens:

>>> import spacy

>>> from spacy import displacy

>>> nlp = spacy.load("en_core_web_sm")

>>> about_interest_text = (

... "He is interested in learning Natural Language Processing."

... )

>>> about_interest_doc = nlp(about_interest_text)

>>> displacy.serve(about_interest_doc, style="dep")

The above code will spin up a simple web server. You can then see the visualization by going to http://127.0.0.1:5000 in your browser:

In the image above, each token is assigned a POS tag written just below the token.

You can also use displaCy in a Jupyter notebook:

In [1]: displacy.render(about_interest_doc, style="dep", jupyter=True)

Have a go at playing around with different texts to see how spaCy deconstructs sentences. Also, take a look at some of the displaCy options available for customizing the visualization.

Preprocessing Functions

To bring your text into a format ideal for analysis, you can write preprocessing functions to encapsulate your cleaning process. For example, in this section, you’ll create a preprocessor that applies the following operations:

- Lowercases the text

- Lemmatizes each token

- Removes punctuation symbols

- Removes stop words

A preprocessing function converts text to an analyzable format. It’s typical for most NLP tasks. Here’s an example:

>>> import spacy

>>> nlp = spacy.load("en_core_web_sm")

>>> complete_text = (

... "Gus Proto is a Python developer currently"

... " working for a London-based Fintech company. He is"

... " interested in learning Natural Language Processing."

... " There is a developer conference happening on 21 July"

... ' 2019 in London. It is titled "Applications of Natural'

... ' Language Processing". There is a helpline number'

... " available at +44-1234567891. Gus is helping organize it."

... " He keeps organizing local Python meetups and several"

... " internal talks at his workplace. Gus is also presenting"

... ' a talk. The talk will introduce the reader about "Use'

... ' cases of Natural Language Processing in Fintech".'

... " Apart from his work, he is very passionate about music."

... " Gus is learning to play the Piano. He has enrolled"

... " himself in the weekend batch of Great Piano Academy."

... " Great Piano Academy is situated in Mayfair or the City"

... " of London and has world-class piano instructors."

... )

>>> complete_doc = nlp(complete_text)

>>> def is_token_allowed(token):

...     return bool(

...         token

...         and str(token).strip()

...         and not token.is_stop

...         and not token.is_punct

...     )

...

>>> def preprocess_token(token):

...     return token.lemma_.strip().lower()

...

>>> complete_filtered_tokens = [

...     preprocess_token(token)

...     for token in complete_doc

...     if is_token_allowed(token)

... ]

>>> complete_filtered_tokens

['gus', 'proto', 'python', 'developer', 'currently', 'work',

'london', 'base', 'fintech', 'company', 'interested', 'learn',

'natural', 'language', 'processing', 'developer', 'conference',

'happen', '21', 'july', '2019', 'london', 'title',

'applications', 'natural', 'language', 'processing', 'helpline',

'number', 'available', '+44', '1234567891', 'gus', 'help',

'organize', 'keep', 'organize', 'local', 'python', 'meetup',

'internal', 'talk', 'workplace', 'gus', 'present', 'talk', 'talk',

'introduce', 'reader', 'use', 'case', 'natural', 'language',

'processing', 'fintech', 'apart', 'work', 'passionate', 'music',

'gus', 'learn', 'play', 'piano', 'enrol', 'weekend', 'batch',

'great', 'piano', 'academy', 'great', 'piano', 'academy',

'situate', 'mayfair', 'city', 'london', 'world', 'class',

'piano', 'instructor']

Note that complete_filtered_tokens doesn’t contain any stop words or punctuation symbols, and it consists purely of lemmatized lowercase tokens.

Rule-Based Matching Using spaCy

Rule-based matching is one of the steps in extracting information from unstructured text. It’s used to identify and extract tokens and phrases according to patterns (such as lowercase) and grammatical features (such as part of speech).

While you can use regular expressions to extract entities (such as phone numbers), rule-based matching in spaCy is more powerful than regex alone, because you can include semantic or grammatical filters.

For example, with rule-based matching, you can extract a first name and a last name, which are always proper nouns:

>>> import spacy

>>> nlp = spacy.load("en_core_web_sm")

>>> about_text = (

... "Gus Proto is a Python developer currently"

... " working for a London-based Fintech"

... " company. He is interested in learning"

... " Natural Language Processing."

... )

>>> about_doc = nlp(about_text)

>>> from spacy.matcher import Matcher

>>> matcher = Matcher(nlp.vocab)

>>> def extract_full_name(nlp_doc):

...     pattern = [{"POS": "PROPN"}, {"POS": "PROPN"}]

...     matcher.add("FULL_NAME", [pattern])

...     matches = matcher(nlp_doc)

...     for _, start, end in matches:

...         span = nlp_doc[start:end]

...         yield span.text

...

>>> next(extract_full_name(about_doc))

'Gus Proto'

In this example, pattern is a list of objects that defines the combination of tokens to be matched. Both POS tags in it are PROPN (proper noun). So, the pattern consists of two objects in which the POS tags for both tokens should be PROPN. This pattern is then added to Matcher with the .add() method, which takes a key identifier and a list of patterns. Finally, matches are obtained with their starting and end indexes.

You can also use rule-based matching to extract phone numbers:

>>> conference_org_text = ("There is a developer conference"

... " happening on 21 July 2019 in London. It is titled"

... ' "Applications of Natural Language Processing".'

... " There is a helpline number available"

... " at (123) 456-7891")

...

>>> def extract_phone_number(nlp_doc):

...     pattern = [

...         {"ORTH": "("},

...         {"SHAPE": "ddd"},

...         {"ORTH": ")"},

...         {"SHAPE": "ddd"},

...         {"ORTH": "-", "OP": "?"},

...         {"SHAPE": "dddd"},

...     ]

...     matcher.add("PHONE_NUMBER", [pattern])

...     matches = matcher(nlp_doc)

...     for match_id, start, end in matches:

...         span = nlp_doc[start:end]

...         return span.text

...

>>> conference_org_doc = nlp(conference_org_text)

>>> extract_phone_number(conference_org_doc)

'(123) 456-7891'

In this example, the pattern is updated in order to match phone numbers. Here, some attributes of the token are also used:

- ORTH matches the exact text of the token.

- SHAPE transforms the token string to show orthographic features, with d standing for digit.

- OP defines operators. Using ? as a value means that the pattern is optional, meaning it can match 0 or 1 times.

Chaining together these dictionaries gives you a lot of flexibility to choose your matching criteria.
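To see which SHAPE values a pattern needs, it can help to print the .shape_ attribute of each token first. This sketch does that on a blank English pipeline, since shapes are lexical attributes that don’t depend on a trained model. The sample sentence is made up for this illustration.

```python
import spacy

nlp = spacy.blank("en")
doc = nlp("Call (123) 456-7891 today")

# Map each token's text to its orthographic shape.
shapes = {token.text: token.shape_ for token in doc}

for text, shape in shapes.items():
    print(f"{text:8} {shape}")
```

The shapes printed for the digit groups are the values that the SHAPE keys in the pattern above have to match.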

Note: For simplicity, in the example, phone numbers are assumed to be of a particular format: (123) 456-7891. You can change this depending on your use case.

Again, rule-based matching helps you identify and extract tokens and phrases by matching according to lexical patterns and grammatical features. This can be useful when you’re looking for a particular entity.

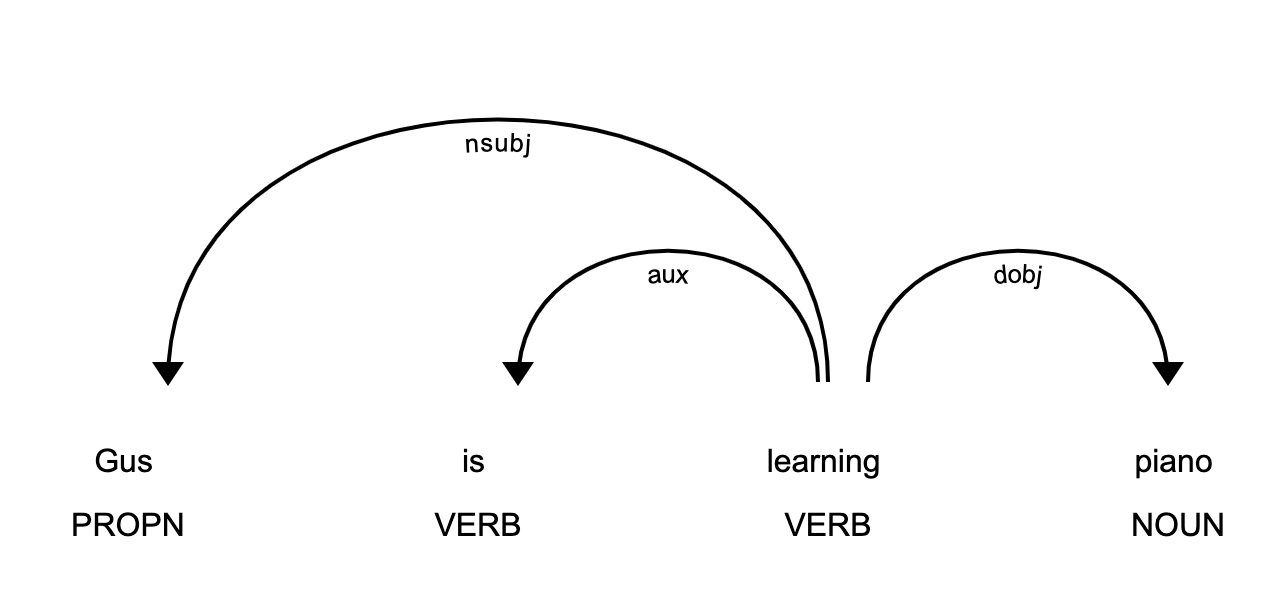

Dependency Parsing Using spaCy

Dependency parsing is the process of extracting the dependency graph of a sentence to represent its grammatical structure. It defines the dependency relationship between headwords and their dependents. The head of a sentence has no dependency and is called the root of the sentence. The verb is usually the root of the sentence. All other words are linked to the headword.

The dependencies can be mapped in a directed graph representation where:

- Words are the nodes.

- Grammatical relationships are the edges.

Dependency parsing helps you know what role a word plays in the text and how different words relate to each other.

Here’s how you can use dependency parsing to find the relationships between words:

>>> import spacy

>>> nlp = spacy.load("en_core_web_sm")

>>> piano_text = "Gus is learning piano"

>>> piano_doc = nlp(piano_text)

>>> for token in piano_doc:
...     print(
...         f"""
... TOKEN: {token.text}
... =====
... {token.tag_ = }
... {token.head.text = }
... {token.dep_ = }"""
...     )
...

TOKEN: Gus

=====

token.tag_ = 'NNP'

token.head.text = 'learning'

token.dep_ = 'nsubj'

TOKEN: is

=====

token.tag_ = 'VBZ'

token.head.text = 'learning'

token.dep_ = 'aux'

TOKEN: learning

=====

token.tag_ = 'VBG'

token.head.text = 'learning'

token.dep_ = 'ROOT'

TOKEN: piano

=====

token.tag_ = 'NN'

token.head.text = 'learning'

token.dep_ = 'dobj'

In this example, the sentence contains three relationships:

- nsubj is the nominal subject of the clause, and its headword is a verb.

- aux is an auxiliary word, and its headword is a verb.

- dobj is the direct object of the verb, and its headword is also a verb.

The list of relationships isn’t particular to spaCy. Rather, it’s an evolving field of linguistics research.
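If it helps to see the directed graph as plain data, here's a minimal sketch that rebuilds it from the output above without calling spaCy. The triples are copied by hand from that output, and keying the dictionary by word text is a simplification that only works because no word repeats in this sentence:

```python
# (token, headword, dependency label) triples copied from the output above
edges = [
    ("Gus", "learning", "nsubj"),
    ("is", "learning", "aux"),
    ("learning", "learning", "ROOT"),
    ("piano", "learning", "dobj"),
]

# The root is the only token labeled ROOT, and it's its own head
root = next(token for token, head, dep in edges if dep == "ROOT")

# Group the dependents under their headword, skipping the root's self-loop
dependents = {}
for token, head, dep in edges:
    if token != head:
        dependents.setdefault(head, []).append((token, dep))

print(root)
print(dependents)
```

Every word other than the root appears exactly once as a dependent, which is what makes the graph a tree.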

You can also use displaCy to visualize the dependency tree of the sentence:

>>> from spacy import displacy
>>> displacy.serve(piano_doc, style="dep")

This code will produce a visualization that you can access by opening http://127.0.0.1:5000 in your browser:

This image shows you visually that the subject of the sentence is the proper noun Gus and that it has a learn relationship with piano.

Tree and Subtree Navigation

The dependency graph has all the properties of a tree. This tree contains information about sentence structure and grammar and can be traversed in different ways to extract relationships.

spaCy provides attributes like .children, .lefts, .rights, and .subtree to make navigating the parse tree easier. Here are a few examples of using those attributes:

>>> import spacy

>>> nlp = spacy.load("en_core_web_sm")

>>> one_line_about_text = (

... "Gus Proto is a Python developer"

... " currently working for a London-based Fintech company"

... )

>>> one_line_about_doc = nlp(one_line_about_text)

>>> # Extract the children of `developer`
>>> print([token.text for token in one_line_about_doc[5].children])
['a', 'Python', 'working']

>>> # Extract the previous neighboring token of `developer`
>>> print(one_line_about_doc[5].nbor(-1))
Python

>>> # Extract the next neighboring token of `developer`
>>> print(one_line_about_doc[5].nbor())
currently

>>> # Extract the children to the left of `developer`
>>> print([token.text for token in one_line_about_doc[5].lefts])
['a', 'Python']

>>> # Extract the children to the right of `developer`
>>> print([token.text for token in one_line_about_doc[5].rights])
['working']

>>> # Print the subtree of `developer`
>>> print(list(one_line_about_doc[5].subtree))
[a, Python, developer, currently, working, for, a, London, -, based, Fintech,
 company]

In these examples, you’ve gotten to know various ways to navigate the dependency tree of a sentence.
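To make the idea behind these attributes more concrete, here's a small pure-Python sketch of the same kinds of lookups over a list of head indices. It reuses the parse of "Gus is learning piano" from the previous section. The helper functions are hypothetical stand-ins for illustration and aren't how spaCy implements the attributes internally:

```python
words = ["Gus", "is", "learning", "piano"]
# heads[i] is the index of the headword of words[i]; the root points to itself
heads = [2, 2, 2, 2]


def children(index):
    """Return the indices of the direct dependents of the token at index."""
    return [i for i, head in enumerate(heads) if head == index and i != index]


def lefts(index):
    """Return the children that appear before the token."""
    return [i for i in children(index) if i < index]


def rights(index):
    """Return the children that appear after the token."""
    return [i for i in children(index) if i > index]


def subtree(index):
    """Return the token and all of its descendants in sentence order."""
    found = [index]
    for child in children(index):
        found.extend(subtree(child))
    return sorted(found)


learning = words.index("learning")
print([words[i] for i in lefts(learning)])
print([words[i] for i in rights(learning)])
print([words[i] for i in subtree(learning)])
```

Because learning is the root here, its subtree is the whole sentence. Calling `subtree()` on any other index in this example returns only that token, since none of the other words have dependents.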

Shallow Parsing

Shallow parsing, or chunking, is the process of extracting phrases from unstructured text. This involves chunking groups of adjacent tokens into phrases on the basis of their POS tags. There are some standard well-known chunks such as noun phrases, verb phrases, and prepositional phrases.
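As a rough illustration of chunking by POS tags, here's a pure-Python sketch that groups adjacent determiners, adjectives, and nouns into noun phrases. The POS tags are assigned by hand for this example rather than produced by a model, and the grouping rule is an assumption that's far cruder than what a real chunker uses:

```python
# Hand-assigned coarse POS tags (an assumption for illustration, not model output)
tagged = [
    ("There", "PRON"),
    ("is", "VERB"),
    ("a", "DET"),
    ("developer", "NOUN"),
    ("conference", "NOUN"),
    ("happening", "VERB"),
    ("in", "ADP"),
    ("London", "PROPN"),
]

NOUN_PHRASE_TAGS = {"DET", "ADJ", "NOUN", "PROPN"}
NOUN_TAGS = {"NOUN", "PROPN"}


def chunk_noun_phrases(tagged_tokens):
    """Group adjacent DET/ADJ/NOUN/PROPN tokens and keep groups ending in a noun."""
    chunks, current = [], []
    # The sentinel at the end flushes the last open group
    for word, tag in tagged_tokens + [("", "END")]:
        if tag in NOUN_PHRASE_TAGS:
            current.append((word, tag))
            continue
        if current and current[-1][1] in NOUN_TAGS:
            chunks.append(" ".join(w for w, _ in current))
        current = []
    return chunks


print(chunk_noun_phrases(tagged))
```

With these hand-assigned tags, the sketch finds a developer conference and London.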

Noun Phrase Detection

A noun phrase is a phrase that has a noun as its head. It could also include other kinds of words, such as adjectives, ordinals, and determiners. Noun phrases are useful for explaining the context of the sentence. They help you understand what the sentence is about.

spaCy has the property .noun_chunks on the Doc object. You can use this property to extract noun phrases:

>>> import spacy

>>> nlp = spacy.load("en_core_web_sm")

>>> conference_text = (

... "There is a developer conference happening on 21 July 2019 in London."

... )

>>> conference_doc = nlp(conference_text)

>>> # Extract Noun Phrases

>>> for chunk in conference_doc.noun_chunks:
...     print(chunk)
...

a developer conference

21 July

London

By looking at noun phrases, you can get information about your text. For example, a developer conference indicates that the text mentions a conference, while the date 21 July lets you know that the conference is scheduled for 21 July.

This is yet another method to summarize a text and obtain the most important information without having to actually read it all.

Verb Phrase Detection

A verb phrase is a syntactic unit composed of at least one verb. This verb can be joined by other chunks, such as noun phrases. Verb phrases are useful for understanding the actions that nouns are involved in.

spaCy has no built-in functionality to extract verb phrases, so you’ll need a library called textacy. You can use pip to install textacy:

(venv) $ python -m pip install textacy

Now that you have textacy installed, you can use it to extract verb phrases based on grammatical rules:

>>> import textacy

>>> about_talk_text = (
...     "In this talk, the speaker will introduce the audience to the use"
...     " cases of Natural Language Processing in"
...     " Fintech, making use of"
...     " interesting examples along the way."
... )
>>> patterns = [{"POS": "AUX"}, {"POS": "VERB"}]
>>> about_talk_doc = textacy.make_spacy_doc(
...     about_talk_text, lang="en_core_web_sm"
... )
>>> verb_phrases = textacy.extract.token_matches(
...     about_talk_doc, patterns=patterns
... )

>>> # Print all verb phrases
>>> for chunk in verb_phrases:
...     print(chunk.text)
...
will introduce

>>> # Extract noun phrases to see which nouns are involved
>>> for chunk in about_talk_doc.noun_chunks:
...     print(chunk)
...

this talk

the speaker

the audience

the use cases

Natural Language Processing

Fintech

use

interesting examples

the way

In this example, the verb phrase will introduce indicates that something will be introduced. By looking at the noun phrases, you can piece together what will be introduced, again without having to read the whole text.

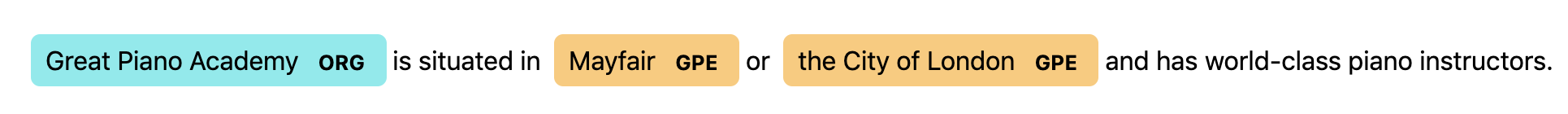

Named-Entity Recognition

Named-entity recognition (NER) is the process of locating named entities in unstructured text and then classifying them into predefined categories, such as person names, organizations, locations, monetary values, percentages, and time expressions.

You can use NER to learn more about the meaning of your text. For example, you could use it to populate tags for a set of documents in order to improve the keyword search. You could also use it to categorize customer support tickets into relevant categories.

spaCy has the property .ents on Doc objects. You can use it to extract named entities:

>>> import spacy

>>> nlp = spacy.load("en_core_web_sm")

>>> piano_class_text = (

... "Great Piano Academy is situated"

... " in Mayfair or the City of London and has"

... " world-class piano instructors."

... )

>>> piano_class_doc = nlp(piano_class_text)

>>> for ent in piano_class_doc.ents:
...     print(
...         f"""
... {ent.text = }
... {ent.start_char = }
... {ent.end_char = }
... {ent.label_ = }
... spacy.explain('{ent.label_}') = {spacy.explain(ent.label_)}"""
...     )
...

ent.text = 'Great Piano Academy'

ent.start_char = 0

ent.end_char = 19

ent.label_ = 'ORG'

spacy.explain('ORG') = Companies, agencies, institutions, etc.

ent.text = 'Mayfair'

ent.start_char = 35

ent.end_char = 42

ent.label_ = 'LOC'

spacy.explain('LOC') = Non-GPE locations, mountain ranges, bodies of water

ent.text = 'the City of London'

ent.start_char = 46

ent.end_char = 64

ent.label_ = 'GPE'

spacy.explain('GPE') = Countries, cities, states

In the above example, ent is a Span object with various attributes:

- .text gives the Unicode text representation of the entity.

- .start_char denotes the character offset for the start of the entity.

- .end_char denotes the character offset for the end of the entity.

- .label_ gives the label of the entity.
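The character offsets index into the original string, so you can check them with ordinary slicing. Here's a short sketch that uses only the text and the offsets reported in the output above, without calling spaCy:

```python
piano_class_text = (
    "Great Piano Academy is situated"
    " in Mayfair or the City of London and has"
    " world-class piano instructors."
)

# (start_char, end_char, label) triples copied from the output above
entity_offsets = [(0, 19, "ORG"), (35, 42, "LOC"), (46, 64, "GPE")]

for start_char, end_char, label in entity_offsets:
    # end_char is exclusive, so a plain slice returns the entity text
    print(f"{label}: {piano_class_text[start_char:end_char]!r}")
```

Each slice reproduces the entity text from the output, which shows that .end_char points one position past the last character of the entity.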

spacy.explain gives descriptive details about each entity label. You can also use displaCy to visualize these entities:

>>> from spacy import displacy
>>> displacy.serve(piano_class_doc, style="ent")

If you open http://127.0.0.1:5000 in your browser, then you’ll be able to see the visualization:

One use case for NER is to redact people’s names from a text. For example, you might want to do this in order to hide personal information collected in a survey. Take a look at the following example:

>>> survey_text = (

... "Out of 5 people surveyed, James Robert,"

... " Julie Fuller and Benjamin Brooks like"

... " apples. Kelly Cox and Matthew Evans"

... " like oranges."

... )

>>> def replace_person_names(token):
...     if token.ent_iob != 0 and token.ent_type_ == "PERSON":
...         return "[REDACTED] "
...     return token.text_with_ws
...
>>> def redact_names(nlp_doc):
...     with nlp_doc.retokenize() as retokenizer:
...         for ent in nlp_doc.ents:
...             retokenizer.merge(ent)
...     tokens = map(replace_person_names, nlp_doc)
...     return "".join(tokens)
...

>>> survey_doc = nlp(survey_text)

>>> print(redact_names(survey_doc))

Out of 5 people surveyed, [REDACTED] , [REDACTED] and [REDACTED] like apples.

[REDACTED] and [REDACTED] like oranges.

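In this example, replace_person_names() uses .ent_iob, which gives the IOB code of the named entity tag using inside-outside-beginning (IOB) tagging. The code is 3 when the token begins an entity, 1 when it's inside an entity, 2 when it's outside an entity, and 0 when no entity tag is set.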

The redact_names() function uses a retokenizer to merge each multi-token entity into a single token. It then passes every token through map() so that replace_person_names() can swap each PERSON token for [REDACTED].
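If IOB tagging is new to you, the following pure-Python sketch shows how B, I, and O tags mark entity boundaries. The tags are labeled by hand for this illustration rather than taken from a model, and `group_entities()` is a hypothetical helper. In spaCy, you'd read the string form of the tag from `token.ent_iob_`:

```python
# Hand-labeled (token, IOB tag, entity type) triples, which are an assumption
# for illustration and not output from a spaCy model
tagged_tokens = [
    ("James", "B", "PERSON"),
    ("Robert", "I", "PERSON"),
    (",", "O", ""),
    ("Julie", "B", "PERSON"),
    ("Fuller", "I", "PERSON"),
    ("like", "O", ""),
    ("apples", "O", ""),
]


def group_entities(tokens):
    """Collect runs of B and I tokens into (text, label) entities."""
    entities, current, label = [], [], ""
    for text, iob, ent_type in tokens:
        if iob == "B" or (iob == "I" and not current):
            # A new entity starts, so close any entity that's still open
            if current:
                entities.append((" ".join(current), label))
            current, label = [text], ent_type
        elif iob == "I":
            current.append(text)
        else:  # "O" means the token is outside any entity
            if current:
                entities.append((" ".join(current), label))
            current, label = [], ""
    if current:
        entities.append((" ".join(current), label))
    return entities


print(group_entities(tagged_tokens))
```

The B tag opens an entity, each following I tag extends it, and the next O or B tag closes it. Merging entities with the retokenizer has a similar effect: each multi-token name becomes a single unit that you can replace in one step.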

So just like that, you would be able to redact a huge amount of text in seconds, while doing it manually could take many hours. That said, you always need to be careful with redaction, because the models aren’t perfect!

Conclusion

spaCy is a powerful and advanced library that’s gaining huge popularity for NLP applications due to its speed, ease of use, accuracy, and extensibility.

In this tutorial, you’ve learned how to:

- Implement NLP in spaCy

- Customize and extend built-in functionalities in spaCy

- Perform basic statistical analysis on a text

- Create a pipeline to process unstructured text

- Parse a sentence and extract meaningful insights from it

You’ve now got some handy tools to start your explorations into the world of natural language processing.


Frequently Asked Questions

Now that you have some experience with using spaCy for natural language processing in Python, you can use the questions and answers below to check your understanding and recap what you’ve learned.

These FAQs are related to the most important concepts you’ve covered in this tutorial.

You use spaCy to perform natural language processing tasks, such as tokenization, part-of-speech tagging, named entity recognition, and dependency parsing.

spaCy handles tokenization by breaking down text into its basic units, or tokens, using a built-in tokenizer that considers linguistic rules and punctuation.

Dependency parsing in spaCy involves extracting the grammatical structure of a sentence, identifying relationships between words, and representing them in a dependency graph.

spaCy is often preferred over NLTK for production applications because it emphasizes speed and efficiency, while NLTK is widely used in research and education for its comprehensive set of tools.

spaCy and BERT serve different purposes. spaCy is a general-purpose NLP library, while BERT is a transformer-based model focused on deep learning tasks. You might integrate BERT within spaCy for advanced NLP tasks.